The Issue

If you try to access workspace files, or files located in a repository folder (as described here), from a shared cluster in Databricks, you may run into the following error:

java.lang.SecurityException: User does not have permission SELECT on any file.

The underlying problem is the set of restrictions Databricks imposes on clusters in Shared access mode, which limit direct file access from user code.

If you want to access Unity Catalog from a cluster, the only two access modes currently available are Shared and Single User. If Single User is out of the question, as is often the case in the projects I work on, you cannot access workspace files from the only remaining option.

The Solution

One way of dealing with this problem is to use the Databricks SDK for Python.

(1) Install the SDK on your Cluster

First, install the SDK on your cluster. There are many ways to install libraries on a cluster; the easiest is to run the following line in a notebook cell of its own:

    %pip install databricks-sdk --upgrade
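After a `%pip install`, the notebook may need a Python restart (on recent runtimes via `dbutils.library.restartPython()`) before the upgraded package is picked up. As a hedged sanity check, the standard library can report which version of the SDK the notebook actually sees; `installed_version` is a hypothetical helper name, not part of the SDK.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str = "databricks-sdk") -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        # The distribution is not installed in this Python environment
        return None


# On a cluster where the install succeeded this prints a version string,
# otherwise None.
print(installed_version())
```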

(2) Initialize, Connect & Authenticate

Next, connect to your workspace and authenticate. In my example, I assume that you have a personal access token (PAT) stored in a secret scope called keyvault under the secret name databrickspat. You could also put the token directly in the code, but that is not recommended.

    from databricks.sdk import WorkspaceClient

    # Connect to the current workspace; the PAT is read from a secret scope
    w = WorkspaceClient(
        host=spark.conf.get("spark.databricks.workspaceUrl"),
        token=dbutils.secrets.get(scope="keyvault", key="databrickspat"),
    )
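The `spark.databricks.workspaceUrl` setting typically holds only the host name (for example `adb-<id>.<n>.azuredatabricks.net`) without a scheme. The SDK may normalize this on its own, but if you prefer to be explicit, a small helper like the hypothetical `normalize_host` below prepends `https://` only when no HTTP scheme is present. This is a plain-Python sketch under that assumption, not something the SDK provides.

```python
from urllib.parse import urlparse


def normalize_host(raw: str) -> str:
    """Return a workspace URL with an explicit https:// scheme and no trailing slash.

    Hypothetical helper: assumes `raw` is either a bare host name
    (as spark.databricks.workspaceUrl usually provides) or a full URL.
    """
    raw = raw.strip().rstrip("/")
    # Keep URLs that already carry an HTTP(S) scheme untouched
    if urlparse(raw).scheme in ("http", "https"):
        return raw
    return "https://" + raw
```

In the notebook you would then pass `host=normalize_host(spark.conf.get("spark.databricks.workspaceUrl"))` to `WorkspaceClient`.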

(3) Interact with Workspace Files

    # List DBFS with dbutils
    w.dbutils.fs.ls("/")

    # ...or with the DBFS API
    for file_ in w.dbfs.list("/"):
        print(file_)

    # List workspace files of a user (recursively)
    for file_ in w.workspace.list("/Users/<username>", recursive=True):
        print(file_.path)

    # List repository files of a user
    for file_ in w.workspace.list("/Repos/<username>/<repo>"):
        print(file_.path)

    # Print the contents of a YAML file stored in Repos/...
    for line in w.workspace.download(path="/Repos/<username>/<repo>/.../catalog.yml"):
        print(line.decode("UTF-8").rstrip("\n"))

    # Upload a (text) file to the user's repository folder
    import base64

    from databricks.sdk.service import workspace

    path = "/Repos/<username>/<repo>/.../test.yml"
    w.workspace.import_(
        content=base64.b64encode("This is the file's content".encode()).decode(),
        format=workspace.ImportFormat.AUTO,
        overwrite=True,
        path=path,
    )
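Since `w.workspace.download(...)` returns a binary file-like object and `w.workspace.import_(content=...)` expects base64-encoded text, the conversions can be pulled into two small helpers. The names `read_text` and `to_import_content` are my own and hypothetical; in this sketch an `io.BytesIO` stands in for the download stream so it runs without a workspace.

```python
import base64
import io
from typing import BinaryIO


def read_text(stream: BinaryIO, encoding: str = "utf-8") -> str:
    """Read a whole binary stream (e.g. from w.workspace.download) into one string."""
    return stream.read().decode(encoding)


def to_import_content(text: str, encoding: str = "utf-8") -> str:
    """Encode text as the base64 string passed to w.workspace.import_(content=...)."""
    return base64.b64encode(text.encode(encoding)).decode("ascii")


# io.BytesIO stands in for the stream returned by w.workspace.download(...)
fake_download = io.BytesIO(b"catalog: main\nschema: default\n")
print(read_text(fake_download).splitlines())  # ['catalog: main', 'schema: default']
print(to_import_content("This is the file's content"))
```

Reading the file in one go makes it easy to hand the text to a YAML parser of your choice (for example PyYAML's `yaml.safe_load`), if one is installed on the cluster.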

Some Example Screenshots

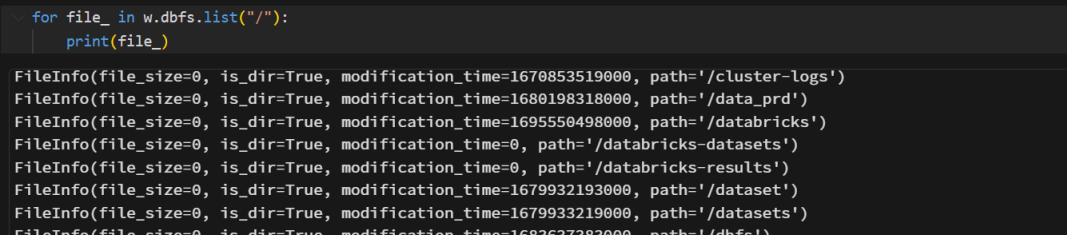

List files in DBFS:

List files in User/Workspace:

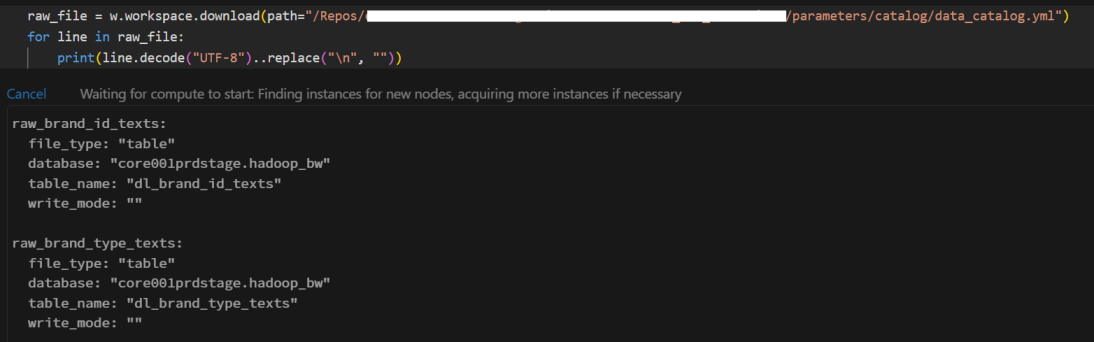

Show the contents of a YAML file saved in the user’s repository folder: